QCon London 2014 - Embracing Change - Building Adaptable Software with Events

Building adaptable software is what we continuously aim at achieving during our day to day work. However, at the same time, it’s probably the hardest part in software development. Everybody of us already had that moment when your customer/project manager proposes you that new “easy” feature, that makes your hackles get up because you know it’s gonna be a nightmare to implement add it. Simply because your previous (apparently reasonable and well-thought) decisions hinder you. At this year’s QCon london, @russmiles aimed to address this problem. This post summarizes what I got from his tutorial.

In his training session “Embracing Change: Building Adaptable Software with Events”, Russell Miles (@russmiles) talked about building #antifragilesoftware. According to him, it’s not that people are creating bad software because they are not intelligent enough to build good one, it’s because they do not know what they have to think about at certain points during the development, about what parts of the software are most critical and when they have to be particularly cautious. They simply don’t have the right questions at hand.

What usually struggles people most when developing a piece of software are..

- people & skills

- to deliver value

- reinventing the wheels (no reuse)

- maintainable code

- overengineering

- bored with business problem and instead want fun, trying something new (-> reinventing the wheels ;) )

- integration with other software

- where to place which application logic

- …

These points can basically be distilled into

- building the right software (or not)

- building the right software right

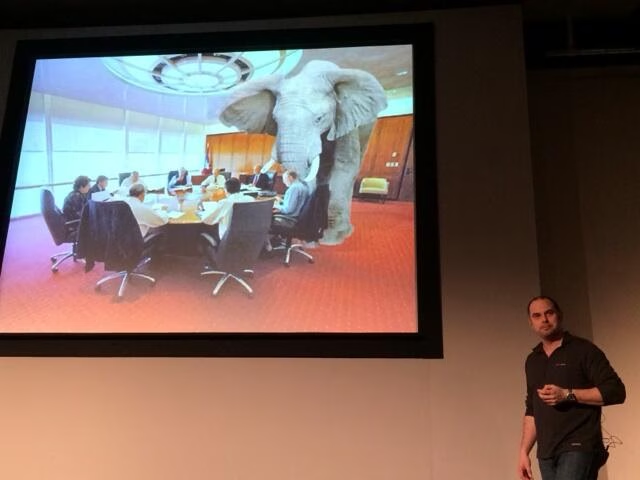

The elephant in the standup: […] in an agile project, …usually in sprint 10 the product owner enters the room with an apparently “small” change that’ll screw up your entire code base. Russ Miles

The problem is that all the decisions that have been taken in previous sprint plannings and/or stand-ups, are the enemy of future modifications and thus allocate to the so-called “elephant”.

Thus, you need to embrace changes, you need to find strategies for building a system that is able to react and adapt, which is antifragile to such problems that usually come along.

Simplicity is a balancing effect. You can run into oversimplification as well, which you get when your “simple” code doesn’t deliver any value to the customer.

Simplicity in the code base is when you can read and understand it by scanning through the class hierarchy and by reading/executing its tests, so Russ, “you cannot have simple code without tests”.

Coupling is your enemy

If you’re about to build a robust system, the first thing you’re looking for is what kind of coupling you have in your software:

- interface/contract sharing

- inheritance

- method names and return types

- method parameters and ordering

- programming language

- exceptions that are being thrown and caught

- annotations (which are currently quite popular in modern programming languages)

- …

The key is to understand the pace with with the different parts of the software need to evolve.

In order to understand the pace of evolution, it is important to gain a view on the architectural design, that helps to group things together that are intended to change together and usually have similar responsibilities. On the other side we need to decouple those areas that change at a different rate.

But watch out, nothing can increase complexity faster than by decoupling it, if you do not know what you’re doing. Therefore, don’t jump straight in! Other than many people belief, there is no one-size-fits-all solution. Don’t apply the “decoupling hammer”!

Instead, start with the lowest possible decoupling like using simple programming interfaces (in the sense of a C#/Java programming construct). Then evolve the decoupling when needed. Possible levels could be:

- Programming interface

- Events

- Message Bus

- Microservices

How does this impact my architecture?

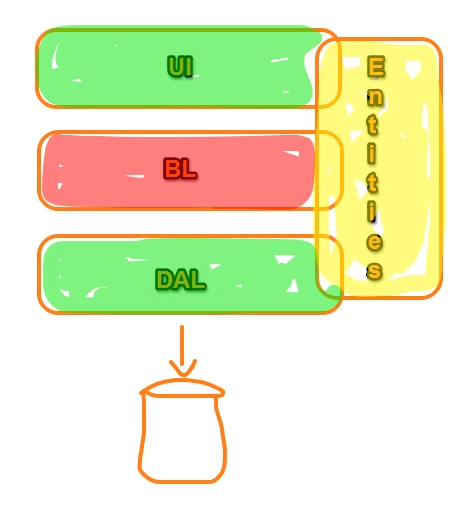

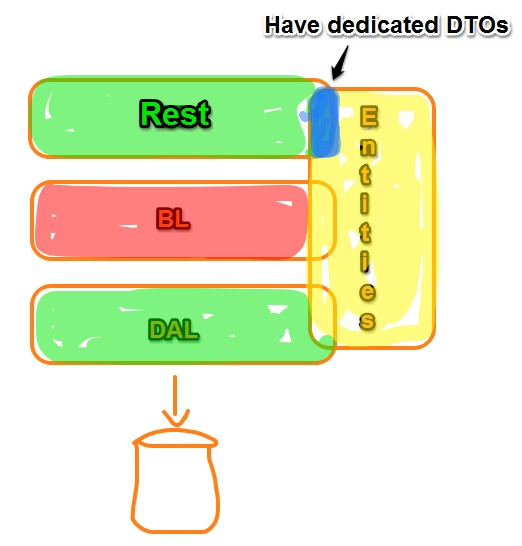

From an architectural point of view you basically start with (what Russ called) a “pizza-box architecture” (forgive the colors, but I’m currently trying out Evernote’s hand drawing feature and Skitch):

Each area is nicely separated and decoupled through interfaces, probably with some dependency injection mechanism which automagically wires the implementation and the interfaces together. This kind of organization imposes a nice structure and makes testing quite easy. It doesn’t help against the elephant yet, though.

The audience of your code is both, the machine and the human. Russ Miles

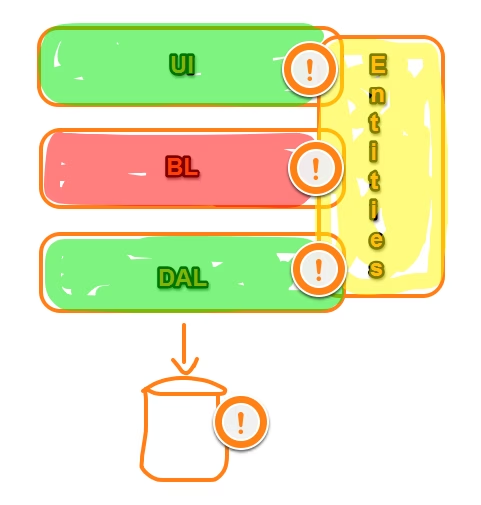

In order to be able to switch the next level of decoupling, you first have to identify the problematic spots of our pizza-box architecture. Where is the coupling? What happens if one area needs to change, which ones will I be forced to adapt?

Assuming you already separate the layers through interfaces, then normally the transversal parts of the classical three layer architecture are the most problematic ones. For instance the domain objects or domain entities. They are touching every area and thus blur the boundaries between the layers, which might be bad when you want to decouple things. Also watch out for annotations, typically from your ORM. They cause coupling as well!

Take for instance what happens if you change the database table?? You need to change the entities, which impacts on your data access layer (probably), your business layer and even your frontend layer where you (most probably) directly use the domain objects for the UI logic. I experienced these problems by myself when we switched to creating rich client JavaScript apps with a REST layer at the backend. Our initial “naive” approach to expose the the Entity Framework entities directly to the JavaScript client isn’t so ideal in the end. So we ended up with some DTOs on the REST interface side which decouples it from changes on the domain entities.

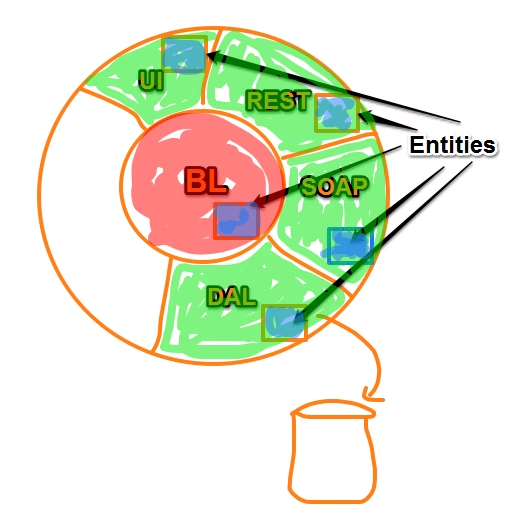

Going forward, we started to build what Russ calls the “Life preserver”. In a first step this means reorganizing our pizza-box diagram into a circle, a cellular structure basically where the core, our busines logic, resides in the middle. All of the components that interact with this core are integrations with the outside world, which are drawn in a second outer circle. As such, our diagram from before might look like this:

Note that even the DB is seen as an integration point because it happens to be the kind of interface where we (currently) store and read the application data. This is a particularly challenging point of view for many developers and architects as they often place the DB in the center of the software. But if you think it further it sounds natural. Tomorrow, it might not be any more a local DB but some remote service where (part of) the data goes to, who knows… The scope is to remain flexible and open to changes. Remember, the elephant!?

Q: But where are the entities now??

A: They're are being replicated among the different areas.

Q: You do what?? Duplication is evil! You should know about the DRY principle, don't you?

A: Sure, but as we've seen, high coupling is evil as well...what's worse??

Q: So you're saying I should duplicate the code among the areas rather than sharing it?

A: If you need to decouple areas from each other, then yes! Duplication among areas is fine whereas it is definitely evil when being done within areas!

For integrating the different areas we have to have some mechanism that allows our data to flow across them. Possibilities are:

- Adapters - ..and mappers that convert between the entities of the different areas.

- strings - as a data exchange format rather than fully typed objects. They are probably the loosest coupling we can create, right? A string can contain anything from a simple value, a CSV series to a fully structured object graph serialized in JSON (for instance). You might want to pass your data as strings between the areas rather than strongly typed objects.

- Event Bus - maybe..(for instance: http://stackoverflow.com/questions/368265/a-simple-event-bus-for-net)

Unfortunately there wasn’t enough time to further elaborate on these things during the training session at QCon. He is working on a Leanpub book though (currently in alpha) which aims at outlining these concepts in more detail: “Antifragile Software”.

Conclusion

This kind of architectural outlining isn’t totally new. They’re called hexagonal architectures and you find plenty explanations on the web:

- http://alistair.cockburn.us/Hexagonal+architecture

- http://blog.8thlight.com/uncle-bob/2012/08/13/the-clean-architecture.html

As my tweet mentions,..

seems like @russmiles goes in the direction of an "onion arch" I was trying to get around a couple of month ago https://t.co/O1GlUrQn0q

— Juri Strumpflohner (@juristr) March 4, 2014..I started to think about them a while back, but didn’t go much ahead due to other stuff that came into my way. My thoughts, trials and reference information so far are on GitHub. However note that especially the code part there is no more up-to-date and should not be taken as a reference. My idea would be to try to implement these aspects in a sample application once I have a bit of time available.

If you’re interested, Uncle Bob Martin enters quite into the details about hexagonal architectures in his blog post. There’s also a Youtube talk available where he outlines his ideas.

So my takeaways:

- only go as far with the decoupling as you really need

- decoupling means increased complexity but higher maintainability; find the right balance for you

- don’t decouple all the time, there is no one-size-fits-all solution but you need to know the concepts and apply them at the correct level, at the right moment, when the situation requires it.

- the hexagonal architectures or “life preserver” approach facilitates an architectural view and thinking which is more focused on aspects of change and speed of evolution of your software

I’ll keep an eye on this, so there’s more to come…